Awesome Mixup Methods for Supervised Learning¶

We summarize fundamental mixup methods proposed for supervised visual representation learning from two aspects: sample mixup policy and label mixup policy. Then, we summarize mixup techniques used in downstream tasks. The list is kept in chronological order and is updated continuously; more papers will be added following Awesome-Mix.

To find related papers and their relationships, check out Connected Papers, which visualizes the academic field in a graph representation.

To export BibTeX citations, use the paper's arXiv or Semantic Scholar page, which provides standard reference formats.
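The two aspects named in the introduction, the sample mixup policy and the label mixup policy, can be illustrated with a minimal sketch of the original mixup formulation (linear interpolation with a Beta-distributed coefficient). The function name `mixup_pair`, its parameters, and the toy inputs are assumptions made for illustration and are not taken from any listed codebase.

```python
import random


def mixup_pair(x_a, y_a, x_b, y_b, alpha=1.0, rng=random):
    """Mix two samples and their one-hot labels (illustrative helper, hypothetical name).

    x_a, x_b: flat lists of floats (e.g. flattened pixels).
    y_a, y_b: one-hot label lists of equal length.
    alpha:    Beta(alpha, alpha) concentration; must be > 0.
    """
    # Draw the mixing coefficient lam in [0, 1].
    lam = rng.betavariate(alpha, alpha)

    # Sample mixup policy: here, plain element-wise linear interpolation.
    x_mix = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]

    # Label mixup policy: here, the same coefficient applied to the labels.
    y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]

    return x_mix, y_mix, lam


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only to make the demo repeatable
    x, y, lam = mixup_pair([0.0, 2.0], [1.0, 0.0], [4.0, 6.0], [0.0, 1.0], rng=rng)
    print("lam:", lam)
    print("mixed sample:", x)
    print("mixed label:", y)
```

Methods in the list mostly differ in how they replace one or both of the two marked steps. CutMix-style methods, for example, paste a rectangular region instead of interpolating and weight the labels by the pasted area ratio.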


Sample Mixup Methods¶

Pre-defined Policies¶

mixup: Beyond Empirical Risk Minimization

Hongyi Zhang, Moustapha Cisse, Yann N. Dauphin, David Lopez-Paz

ICLR’2018 [Paper] [Code]MixUp Framework

Between-class Learning for Image Classification

Yuji Tokozume, Yoshitaka Ushiku, Tatsuya Harada

CVPR’2018 [Paper] [Code]BC Framework

MixUp as Locally Linear Out-Of-Manifold Regularization

Hongyu Guo, Yongyi Mao, Richong Zhang

AAAI’2019 [Paper]AdaMixup Framework

CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features

Sangdoo Yun, Dongyoon Han, Seong Joon Oh, Sanghyuk Chun, Junsuk Choe, Youngjoon Yoo

ICCV’2019 [Paper] [Code]CutMix Framework

Manifold Mixup: Better Representations by Interpolating Hidden States

Vikas Verma, Alex Lamb, Christopher Beckham, Amir Najafi, Ioannis Mitliagkas, David Lopez-Paz, Yoshua Bengio

ICML’2019 [Paper] [Code]ManifoldMix Framework

Improved Mixed-Example Data Augmentation

Cecilia Summers, Michael J. Dinneen

WACV’2019 [Paper] [Code]MixedExamples Framework

FMix: Enhancing Mixed Sample Data Augmentation

Ethan Harris, Antonia Marcu, Matthew Painter, Mahesan Niranjan, Adam Prügel-Bennett, Jonathon Hare

ArXiv’2020 [Paper] [Code]FMix Framework

SmoothMix: a Simple Yet Effective Data Augmentation to Train Robust Classifiers

Jin-Ha Lee, Muhammad Zaigham Zaheer, Marcella Astrid, Seung-Ik Lee

CVPRW’2020 [Paper] [Code]SmoothMix Framework

PatchUp: A Regularization Technique for Convolutional Neural Networks

Mojtaba Faramarzi, Mohammad Amini, Akilesh Badrinaaraayanan, Vikas Verma, Sarath Chandar

Arxiv’2020 [Paper] [Code]PatchUp Framework

GridMix: Strong regularization through local context mapping

Kyungjune Baek, Duhyeon Bang, Hyunjung Shim

Pattern Recognition’2021 [Paper] [Code]GridMixup Framework

ResizeMix: Mixing Data with Preserved Object Information and True Labels

Jie Qin, Jiemin Fang, Qian Zhang, Wenyu Liu, Xingang Wang, Xinggang Wang

ArXiv’2020 [Paper] [Code]ResizeMix Framework

Where to Cut and Paste: Data Regularization with Selective Features

Jiyeon Kim, Ik-Hee Shin, Jong-Ryul Lee, Yong-Ju Lee

ICTC’2020 [Paper] [Code]FocusMix Framework

AugMix: A Simple Data Processing Method to Improve Robustness and Uncertainty

Dan Hendrycks, Norman Mu, Ekin D. Cubuk, Barret Zoph, Justin Gilmer, Balaji Lakshminarayanan

ICLR’2020 [Paper] [Code]AugMix Framework

DJMix: Unsupervised Task-agnostic Augmentation for Improving Robustness

Ryuichiro Hataya, Hideki Nakayama

Arxiv’2021 [Paper]DJMix Framework

PixMix: Dreamlike Pictures Comprehensively Improve Safety Measures

Dan Hendrycks, Andy Zou, Mantas Mazeika, Leonard Tang, Bo Li, Dawn Song, Jacob Steinhardt

Arxiv’2021 [Paper] [Code]PixMix Framework

StyleMix: Separating Content and Style for Enhanced Data Augmentation

Minui Hong, Jinwoo Choi, Gunhee Kim

CVPR’2021 [Paper] [Code]StyleMix Framework

Domain Generalization with MixStyle

Kaiyang Zhou, Yongxin Yang, Yu Qiao, Tao Xiang

ICLR’2021 [Paper] [Code]MixStyle Framework

On Feature Normalization and Data Augmentation

Boyi Li, Felix Wu, Ser-Nam Lim, Serge Belongie, Kilian Q. Weinberger

CVPR’2021 [Paper] [Code]MoEx Framework

Guided Interpolation for Adversarial Training

Chen Chen, Jingfeng Zhang, Xilie Xu, Tianlei Hu, Gang Niu, Gang Chen, Masashi Sugiyama

ArXiv’2021 [Paper]GIF Framework

Observations on K-image Expansion of Image-Mixing Augmentation for Classification

Joonhyun Jeong, Sungmin Cha, Youngjoon Yoo, Sangdoo Yun, Taesup Moon, Jongwon Choi

IEEE Access’2021 [Paper] [Code]DCutMix Framework

Noisy Feature Mixup

Soon Hoe Lim, N. Benjamin Erichson, Francisco Utrera, Winnie Xu, Michael W. Mahoney

ICLR’2022 [Paper] [Code]NFM Framework

Preventing Manifold Intrusion with Locality: Local Mixup

Raphael Baena, Lucas Drumetz, Vincent Gripon

EUSIPCO’2022 [Paper] [Code]LocalMix Framework

RandomMix: A mixed sample data augmentation method with multiple mixed modes

Xiaoliang Liu, Furao Shen, Jian Zhao, Changhai Nie

ArXiv’2022 [Paper]RandomMix Framework

SuperpixelGridCut, SuperpixelGridMean and SuperpixelGridMix Data Augmentation

Karim Hammoudi, Adnane Cabani, Bouthaina Slika, Halim Benhabiles, Fadi Dornaika, Mahmoud Melkemi

ArXiv’2022 [Paper] [Code]SuperpixelGridCut Framework

AugRmixAT: A Data Processing and Training Method for Improving Multiple Robustness and Generalization Performance

Xiaoliang Liu, Furao Shen, Jian Zhao, Changhai Nie

ICME’2022 [Paper]AugRmixAT Framework

A Unified Analysis of Mixed Sample Data Augmentation: A Loss Function Perspective

Chanwoo Park, Sangdoo Yun, Sanghyuk Chun

NIPS’2022 [Paper] [Code]MSDA Framework

RegMixup: Mixup as a Regularizer Can Surprisingly Improve Accuracy and Out Distribution Robustness

Francesco Pinto, Harry Yang, Ser-Nam Lim, Philip H.S. Torr, Puneet K. Dokania

NIPS’2022 [Paper] [Code]RegMixup Framework

Saliency-guided Policies¶

SaliencyMix: A Saliency Guided Data Augmentation Strategy for Better Regularization

A F M Shahab Uddin, Mst. Sirazam Monira, Wheemyung Shin, TaeChoong Chung, Sung-Ho Bae

ICLR’2021 [Paper] [Code]SaliencyMix Framework

Attentive CutMix: An Enhanced Data Augmentation Approach for Deep Learning Based Image Classification

Devesh Walawalkar, Zhiqiang Shen, Zechun Liu, Marios Savvides

ICASSP’2020 [Paper] [Code]AttentiveMix Framework

SnapMix: Semantically Proportional Mixing for Augmenting Fine-grained Data

Shaoli Huang, Xinchao Wang, Dacheng Tao

AAAI’2021 [Paper] [Code]SnapMix Framework

Attribute Mix: Semantic Data Augmentation for Fine Grained Recognition

Hao Li, Xiaopeng Zhang, Hongkai Xiong, Qi Tian

VCIP’2020 [Paper]AttributeMix Framework

On Adversarial Mixup Resynthesis

Christopher Beckham, Sina Honari, Vikas Verma, Alex Lamb, Farnoosh Ghadiri, R Devon Hjelm, Yoshua Bengio, Christopher Pal

NIPS’2019 [Paper] [Code]AMR Framework

Patch-level Neighborhood Interpolation: A General and Effective Graph-based Regularization Strategy

Ke Sun, Bing Yu, Zhouchen Lin, Zhanxing Zhu

ArXiv’2019 [Paper]Pani VAT Framework

AutoMix: Mixup Networks for Sample Interpolation via Cooperative Barycenter Learning

Jianchao Zhu, Liangliang Shi, Junchi Yan, Hongyuan Zha

ECCV’2020 [Paper]AutoMix Framework

PuzzleMix: Exploiting Saliency and Local Statistics for Optimal Mixup

Jang-Hyun Kim, Wonho Choo, Hyun Oh Song

ICML’2020 [Paper] [Code]PuzzleMix Framework

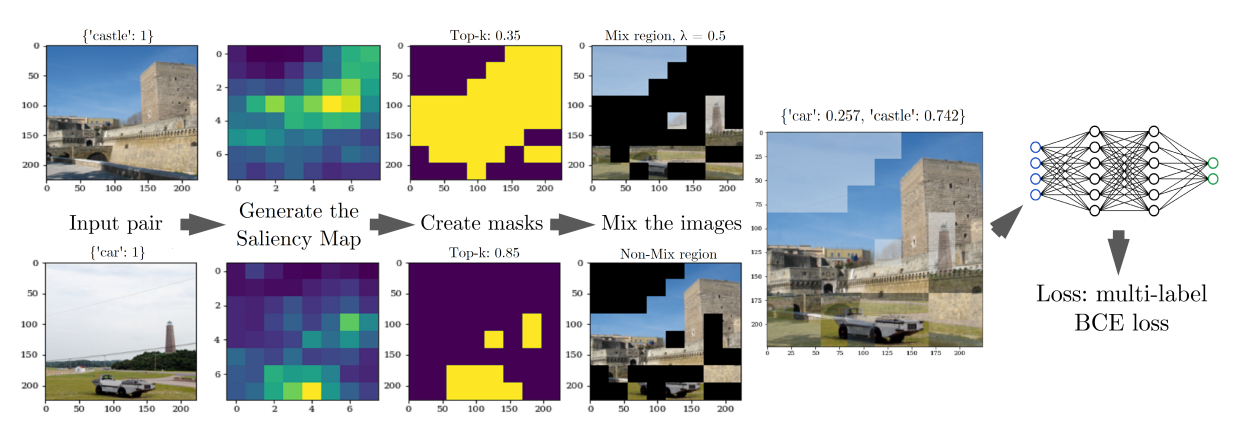

Co-Mixup: Saliency Guided Joint Mixup with Supermodular Diversity

Jang-Hyun Kim, Wonho Choo, Hosan Jeong, Hyun Oh Song

ICLR’2021 [Paper] [Code]Co-Mixup Framework

SuperMix: Supervising the Mixing Data Augmentation

Ali Dabouei, Sobhan Soleymani, Fariborz Taherkhani, Nasser M. Nasrabadi

CVPR’2021 [Paper] [Code]SuperMix Framework

Evolving Image Compositions for Feature Representation Learning

Paola Cascante-Bonilla, Arshdeep Sekhon, Yanjun Qi, Vicente Ordonez

BMVC’2021 [Paper]PatchMix Framework

StackMix: A complementary Mix algorithm

John Chen, Samarth Sinha, Anastasios Kyrillidis

UAI’2022 [Paper]StackMix Framework

SalfMix: A Novel Single Image-Based Data Augmentation Technique Using a Saliency Map

Jaehyeop Choi, Chaehyeon Lee, Donggyu Lee, Heechul Jung

Sensors’2021 [Paper]SalfMix Framework

k-Mixup Regularization for Deep Learning via Optimal Transport

Kristjan Greenewald, Anming Gu, Mikhail Yurochkin, Justin Solomon, Edward Chien

ArXiv’2021 [Paper]k-Mixup Framework

AlignMix: Improving representation by interpolating aligned features

Shashanka Venkataramanan, Ewa Kijak, Laurent Amsaleg, Yannis Avrithis

CVPR’2022 [Paper] [Code]AlignMix Framework

AutoMix: Unveiling the Power of Mixup for Stronger Classifiers

Zicheng Liu, Siyuan Li, Di Wu, Zihan Liu, Zhiyuan Chen, Lirong Wu, Stan Z. Li

ECCV’2022 [Paper] [Code]AutoMix Framework

Boosting Discriminative Visual Representation Learning with Scenario-Agnostic Mixup

Siyuan Li, Zicheng Liu, Di Wu, Zihan Liu, Stan Z. Li

Arxiv’2021 [Paper] [Code]SAMix Framework

ScoreNet: Learning Non-Uniform Attention and Augmentation for Transformer-Based Histopathological Image Classification

Thomas Stegmüller, Behzad Bozorgtabar, Antoine Spahr, Jean-Philippe Thiran

Arxiv’2022 [Paper]ScoreMix Framework

RecursiveMix: Mixed Learning with History

Lingfeng Yang, Xiang Li, Borui Zhao, Renjie Song, Jian Yang

NIPS’2022 [Paper] [Code]RecursiveMix Framework

Swapping Semantic Contents for Mixing Images

Remy Sun, Clement Masson, Gilles Henaff, Nicolas Thome, Matthieu Cord

ICPR’2022 [Paper]SciMix Framework

TransformMix: Learning Transformation and Mixing Strategies for Sample-mixing Data Augmentation

Tsz-Him Cheung, Dit-Yan Yeung

OpenReview’2023 [Paper]TransformMix Framework

GuidedMixup: An Efficient Mixup Strategy Guided by Saliency Maps

Minsoo Kang, Suhyun Kim

AAAI’2023 [Paper]GuidedMixup Framework

MixPro: Data Augmentation with MaskMix and Progressive Attention Labeling for Vision Transformer

Qihao Zhao, Yangyu Huang, Wei Hu, Fan Zhang, Jun Liu

ICLR’2023 [Paper]MixPro Framework

Expeditious Saliency-guided Mix-up through Random Gradient Thresholding

Minh-Long Luu, Zeyi Huang, Eric P. Xing, Yong Jae Lee, Haohan Wang

2nd Practical-DL Workshop @ AAAI’23 [Paper] [Code]R-Mix and R-LMix Framework

SMMix: Self-Motivated Image Mixing for Vision Transformers

Mengzhao Chen, Mingbao Lin, ZhiHang Lin, Yuxin Zhang, Fei Chao, Rongrong Ji

ICCV’2023 [Paper] [Code]SMMix Framework

Teach me how to Interpolate a Myriad of Embeddings

Shashanka Venkataramanan, Ewa Kijak, Laurent Amsaleg, Yannis Avrithis

Arxiv’2022 [Paper]MultiMix Framework

GradSalMix: Gradient Saliency-Based Mix for Image Data Augmentation

Tao Hong, Ya Wang, Xingwu Sun, Fengzong Lian, Zhanhui Kang, Jinwen Ma

ICME’2023 [Paper]GradSalMix Framework

Label Mixup Methods¶

mixup: Beyond Empirical Risk Minimization

Hongyi Zhang, Moustapha Cisse, Yann N. Dauphin, David Lopez-Paz

ICLR’2018 [Paper] [Code]

CutMix: Regularization Strategy to Train Strong Classifiers with Localizable Features

Sangdoo Yun, Dongyoon Han, Seong Joon Oh, Sanghyuk Chun, Junsuk Choe, Youngjoon Yoo

ICCV’2019 [Paper] [Code]

Metamixup: Learning adaptive interpolation policy of mixup with metalearning

Zhijun Mai, Guosheng Hu, Dexiong Chen, Fumin Shen, Heng Tao Shen

TNNLS’2021 [Paper]MetaMixup Framework

Mixup Without Hesitation

Hao Yu, Huanyu Wang, Jianxin Wu

ICIG’2022 [Paper] [Code]

Combining Ensembles and Data Augmentation can Harm your Calibration

Yeming Wen, Ghassen Jerfel, Rafael Muller, Michael W. Dusenberry, Jasper Snoek, Balaji Lakshminarayanan, Dustin Tran

ICLR’2021 [Paper] [Code]CAMixup Framework

All Tokens Matter: Token Labeling for Training Better Vision Transformers

Zihang Jiang, Qibin Hou, Li Yuan, Daquan Zhou, Yujun Shi, Xiaojie Jin, Anran Wang, Jiashi Feng

NIPS’2021 [Paper] [Code]TokenLabeling Framework

Saliency Grafting: Innocuous Attribution-Guided Mixup with Calibrated Label Mixing

Joonhyung Park, June Yong Yang, Jinwoo Shin, Sung Ju Hwang, Eunho Yang

AAAI’2022 [Paper]Saliency Grafting Framework

TransMix: Attend to Mix for Vision Transformers

Jie-Neng Chen, Shuyang Sun, Ju He, Philip Torr, Alan Yuille, Song Bai

CVPR’2022 [Paper] [Code]TransMix Framework

GenLabel: Mixup Relabeling using Generative Models

Jy-yong Sohn, Liang Shang, Hongxu Chen, Jaekyun Moon, Dimitris Papailiopoulos, Kangwook Lee

ArXiv’2022 [Paper]GenLabel Framework

Decoupled Mixup for Data-efficient Learning

Zicheng Liu, Siyuan Li, Ge Wang, Cheng Tan, Lirong Wu, Stan Z. Li

NIPS’2023 [Paper] [Code]DecoupleMix Framework

TokenMix: Rethinking Image Mixing for Data Augmentation in Vision Transformers

Jihao Liu, Boxiao Liu, Hang Zhou, Hongsheng Li, Yu Liu

ECCV’2022 [Paper] [Code]TokenMix Framework

Optimizing Random Mixup with Gaussian Differential Privacy

Donghao Li, Yang Cao, Yuan Yao

arXiv’2022 [Paper]

TokenMixup: Efficient Attention-guided Token-level Data Augmentation for Transformers

Hyeong Kyu Choi, Joonmyung Choi, Hyunwoo J. Kim

NIPS’2022 [Paper] [Code]TokenMixup Framework

Token-Label Alignment for Vision Transformers

Han Xiao, Wenzhao Zheng, Zheng Zhu, Jie Zhou, Jiwen Lu

arXiv’2022 [Paper] [Code]TL-Align Framework

LUMix: Improving Mixup by Better Modelling Label Uncertainty

Shuyang Sun, Jie-Neng Chen, Ruifei He, Alan Yuille, Philip Torr, Song Bai

arXiv’2022 [Paper] [Code]LUMix Framework

MixupE: Understanding and Improving Mixup from Directional Derivative Perspective

Vikas Verma, Sarthak Mittal, Wai Hoh Tang, Hieu Pham, Juho Kannala, Yoshua Bengio, Arno Solin, Kenji Kawaguchi

arXiv’2022 [Paper]MixupE Framework

Infinite Class Mixup

Thomas Mensink, Pascal Mettes

arXiv’2023 [Paper]IC-Mixup Framework

Semantic Equivariant Mixup

Zongbo Han, Tianchi Xie, Bingzhe Wu, Qinghua Hu, Changqing Zhang

arXiv’2023 [Paper]SEM Framework

RankMixup: Ranking-Based Mixup Training for Network Calibration

Jongyoun Noh, Hyekang Park, Junghyup Lee, Bumsub Ham

ICCV’2023 [Paper] [Code]RankMixup Framework

G-Mix: A Generalized Mixup Learning Framework Towards Flat Minima

Xingyu Li, Bo Tang

arXiv’2023 [Paper]G-Mix Framework

Analysis of Mixup¶

Sunil Thulasidasan, Gopinath Chennupati, Jeff Bilmes, Tanmoy Bhattacharya, Sarah Michalak.

On Mixup Training: Improved Calibration and Predictive Uncertainty for Deep Neural Networks. [NIPS’2019] [code]

Framework

Luigi Carratino, Moustapha Cissé, Rodolphe Jenatton, Jean-Philippe Vert.

On Mixup Regularization. [ArXiv’2020]

Framework

Linjun Zhang, Zhun Deng, Kenji Kawaguchi, Amirata Ghorbani, James Zou.

How Does Mixup Help With Robustness and Generalization? [ICLR’2021]

Framework

Muthu Chidambaram, Xiang Wang, Yuzheng Hu, Chenwei Wu, Rong Ge.

Towards Understanding the Data Dependency of Mixup-style Training. [ICLR’2022]

Framework

Linjun Zhang, Zhun Deng, Kenji Kawaguchi, James Zou.

When and How Mixup Improves Calibration. [ICML’2022]

Framework

Zixuan Liu, Ziqiao Wang, Hongyu Guo, Yongyi Mao.

Over-Training with Mixup May Hurt Generalization. [ICLR’2023]

Framework

Junsoo Oh, Chulhee Yun.

Provable Benefit of Mixup for Finding Optimal Decision Boundaries. [ICML’2023]

Deng-Bao Wang, Lanqing Li, Peilin Zhao, Pheng-Ann Heng, Min-Ling Zhang.

On the Pitfall of Mixup for Uncertainty Calibration. [CVPR’2023]

Hongjun Choi, Eun Som Jeon, Ankita Shukla, Pavan Turaga.

Understanding the Role of Mixup in Knowledge Distillation: An Empirical Study. [WACV’2023]

Soyoun Won, Sung-Ho Bae, Seong Tae Kim.

Analyzing Effects of Mixed Sample Data Augmentation on Model Interpretability. [arXiv’2023]

Survey¶

A survey on Image Data Augmentation for Deep Learning

Connor Shorten and Taghi Khoshgoftaar

Journal of Big Data’2019 [Paper]

Survey: Image Mixing and Deleting for Data Augmentation

Humza Naveed, Saeed Anwar, Munawar Hayat, Kashif Javed, Ajmal Mian

ArXiv’2021 [Paper] [Code]

An overview of mixing augmentation methods and augmentation strategies

Dominik Lewy and Jacek Mańdziuk

Artificial Intelligence Review’2022 [Paper]

Image Data Augmentation for Deep Learning: A Survey

Suorong Yang, Weikang Xiao, Mengcheng Zhang, Suhan Guo, Jian Zhao, Furao Shen

ArXiv’2022 [Paper]

A Survey of Mix-based Data Augmentation: Taxonomy, Methods, Applications, and Explainability

Chengtai Cao, Fan Zhou, Yurou Dai, Jianping Wang

ArXiv’2022 [Paper] [Code]

Contribution¶

Feel free to send pull requests that add more links in the following Markdown format. Note that the abbreviation, the code link, and the figure link are optional. Current contributors include Siyuan Li (@Lupin1998) and Zicheng Liu (@pone7).

* **TITLE**<br>

*AUTHOR*<br>

PUBLISH'YEAR [[Paper](link)] [[Code](link)]

<details close>

<summary>ABBREVIATION Framework</summary>

<p align="center"><img width="90%" src="link_to_image" /></p>

</details>